Deep Screen

Python, Unity

CS + Digital Art Thesis project

2023.9 - Current

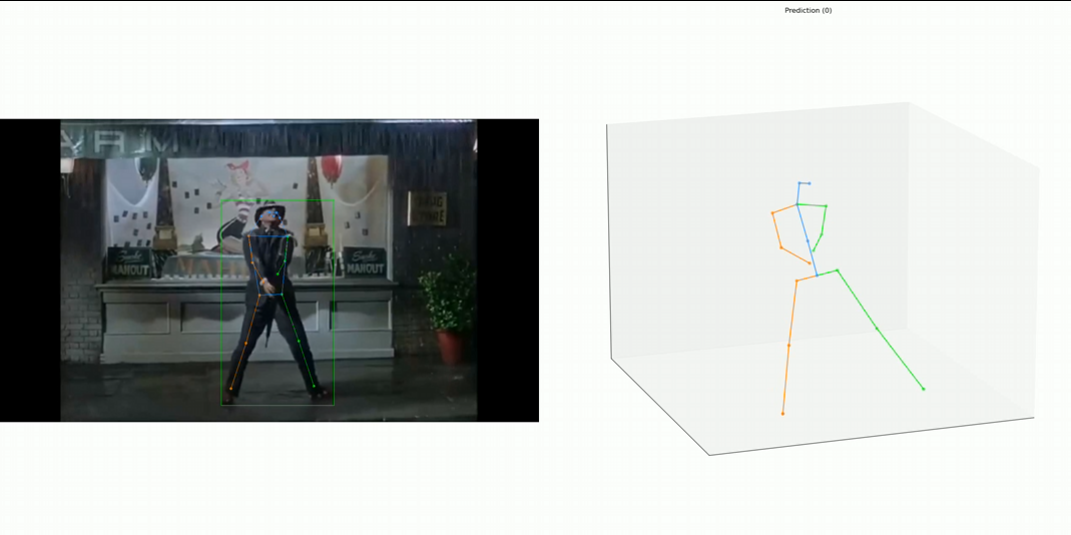

This project uses computer vision (CV) and machine learning (ML) to analyze video and generate statistical data for applications such as motion capture. As part of a broader initiative to study acting styles, it focuses on building a pipeline that visualizes CV/ML output in a 3D virtual environment, with special attention to uncertain or erroneous data.

Key challenges include copyright constraints, pipeline functionality, and future adaptability. To comply with DMCA exemptions, the project uses videos from the Internet Archive under fair use and excludes real-time pose estimation. It turns pose estimation on film footage into 3D animations, prioritizing accuracy over real-time tracking, and adapts MMPose to handle occlusions and multiple people.

The pipeline is designed to integrate new algorithms as they emerge, so it can stay relevant as the underlying technology improves. In VR, the project uses visual effects to highlight data uncertainty, making the uncertainty itself part of the artwork.
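
As a minimal sketch of how uncertainty could drive a visual effect, the Python below maps a per-joint confidence score (MMPose reports a score for each detected keypoint) to two effect parameters. The `JointEffect` fields, the `low`/`high` thresholds, and the linear ramp are hypothetical illustrations, not the project's actual Unity effect.

```python
from dataclasses import dataclass


@dataclass
class JointEffect:
    """Hypothetical per-joint VFX parameters a Unity shader or particle system could read."""
    opacity: float  # 1.0 = solid joint, lower = fading out
    spread: float   # 0.0 = crisp, 1.0 = maximally scattered particles


def confidence_to_effect(score: float, low: float = 0.3, high: float = 0.9) -> JointEffect:
    """Map a keypoint confidence score to effect strength (assumed linear ramp).

    Scores at or below `low` count as fully uncertain, scores at or above
    `high` as fully certain; values in between are interpolated linearly.
    """
    if not low < high:
        raise ValueError("low must be smaller than high")
    certainty = (score - low) / (high - low)
    certainty = min(1.0, max(0.0, certainty))  # clamp to [0, 1]
    uncertainty = 1.0 - certainty
    # Keep a faint minimum opacity so uncertain joints stay visible.
    return JointEffect(opacity=0.2 + 0.8 * certainty, spread=uncertainty)


# A confidently detected joint vs. a likely occluded one.
print(confidence_to_effect(0.95))  # solid and crisp
print(confidence_to_effect(0.40))  # faded and scattered
```

Keeping a minimum opacity is a design choice: an uncertain joint fades and scatters instead of disappearing, so the viewer still sees where the model "thinks" it is.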

Demo Video:

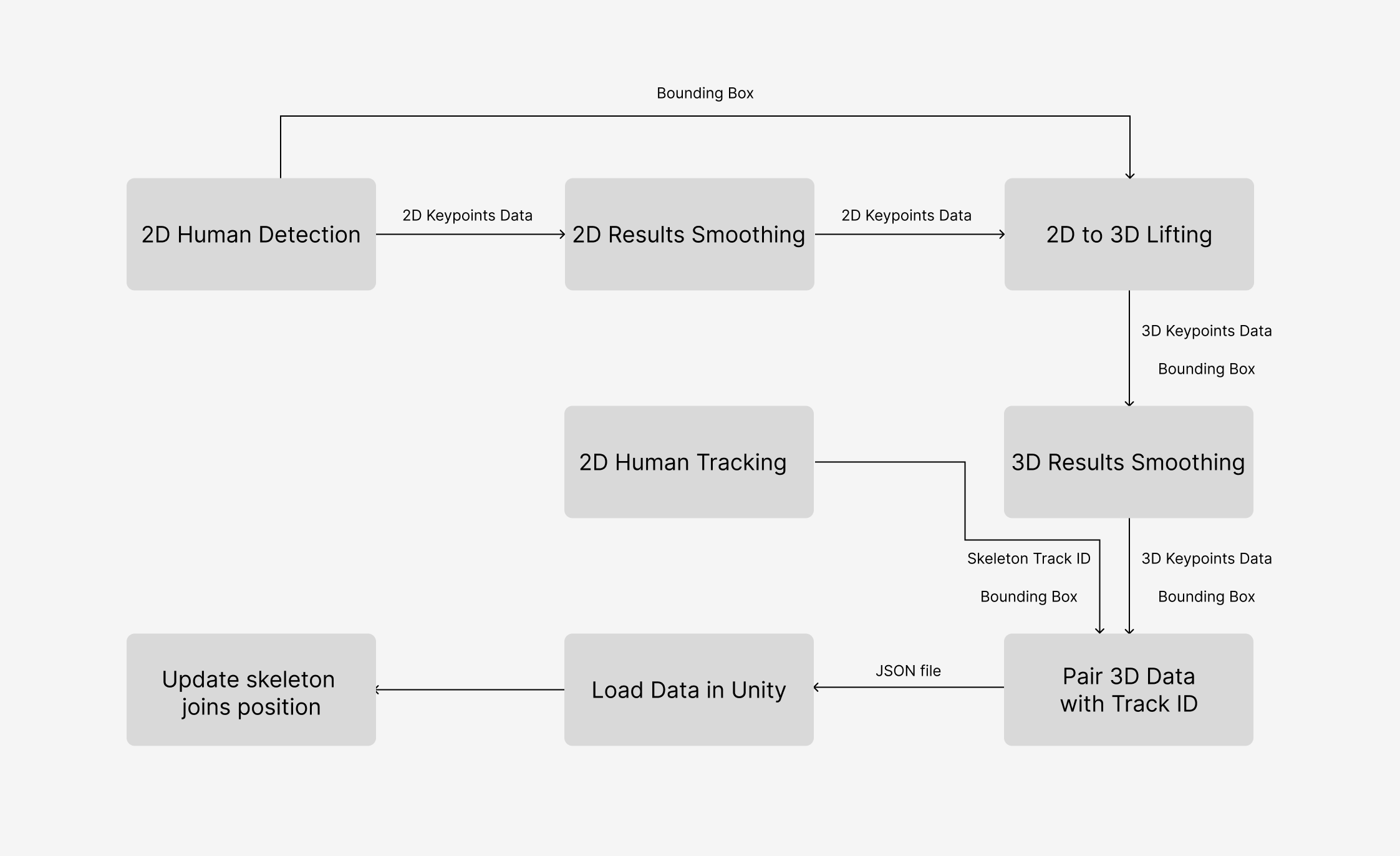

Pipeline Design:

Key Functions:

Pose Estimation:

Used MMPose for 2D pose estimation and 2D-to-3D pose lifting.

Smooth:

Applied a smoothing filter between the 2D and 3D stages to reduce jitter.
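
The filter used is not specified here. As one illustrative option, the sketch below applies a centered moving average over time to each 2D keypoint coordinate before lifting. The `smooth_keypoints` name, the `window` size, and the list-of-`(x, y)` frame layout are assumptions. Because the pipeline runs offline rather than in real time, a centered (non-causal) window that looks at future frames is acceptable.

```python
from typing import List, Tuple

Keypoint = Tuple[float, float]  # (x, y) in image pixels (assumed layout)
Frame = List[Keypoint]          # one person's keypoints for one frame


def smooth_keypoints(frames: List[Frame], window: int = 5) -> List[Frame]:
    """Centered moving average over time, per keypoint and per coordinate.

    Near the start and end of the clip the window is truncated, so those
    frames are averaged over fewer neighbors.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    n = len(frames)
    smoothed: List[Frame] = []
    for t in range(n):
        span = frames[max(0, t - half):min(n, t + half + 1)]
        frame: Frame = []
        for k in range(len(frames[t])):
            xs = [f[k][0] for f in span]
            ys = [f[k][1] for f in span]
            frame.append((sum(xs) / len(xs), sum(ys) / len(ys)))
        smoothed.append(frame)
    return smoothed


# A single keypoint jittering between x=0 and x=3.
frames = [[(0.0, 0.0)], [(3.0, 0.0)], [(0.0, 0.0)], [(3.0, 0.0)], [(0.0, 0.0)]]
print(smooth_keypoints(frames, window=3))
# [[(1.5, 0.0)], [(1.0, 0.0)], [(2.0, 0.0)], [(1.0, 0.0)], [(1.5, 0.0)]]
```

A moving average also blurs fast, intentional motion. Filters such as One Euro or Savitzky-Golay are common alternatives that trade off jitter removal against lag and peak preservation differently.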

Tracking Match:

Compared the bounding boxes from MMPose and MMTracking to match each skeleton to a tracked person, then saved the skeleton with its tracking ID.
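
A minimal sketch of the matching step, assuming both MMPose and MMTracking provide boxes as `(x1, y1, x2, y2)` pixel coordinates for the same frame. Each pose instance takes the tracking ID whose box overlaps it most, measured by intersection over union (IoU), using greedy one-to-one assignment and a hypothetical `min_iou` threshold. The function names and the greedy strategy are illustrative assumptions; the Hungarian algorithm is a common alternative when a globally optimal assignment is needed.

```python
from typing import Dict, List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_tracks(pose_boxes: List[Box], track_boxes: Dict[int, Box],
                 min_iou: float = 0.5) -> List[Optional[int]]:
    """Greedily give each pose box the unused track ID with the highest IoU.

    Returns one entry per pose box: a tracking ID, or None if no track
    overlaps it by at least `min_iou`.
    """
    # All (IoU, pose index, track ID) candidates, best overlap first.
    pairs = sorted(
        ((iou(p, t), i, tid)
         for i, p in enumerate(pose_boxes)
         for tid, t in track_boxes.items()),
        reverse=True,
    )
    assigned: List[Optional[int]] = [None] * len(pose_boxes)
    used = set()
    for score, i, tid in pairs:
        if score < min_iou:
            break  # remaining candidates overlap even less
        if assigned[i] is None and tid not in used:
            assigned[i] = tid
            used.add(tid)
    return assigned


poses = [(0, 0, 10, 10), (20, 20, 30, 30), (100, 100, 110, 110)]
tracks = {7: (21, 21, 31, 31), 3: (1, 1, 11, 11)}
print(match_tracks(poses, tracks))  # [3, 7, None]
```

The returned list lines up with the pose instances, so each skeleton can be stored alongside its tracking ID, or skipped when the entry is `None`.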

Unity Methods: